AWS PrivateLink

1. Introduction

AWS PrivateLink provides a highly secure and scalable solution for exposing services privately from one VPC to thousands of other VPCs. Unlike traditional VPC peering—which connects entire VPCs and requires modifications to route tables and other network configurations—PrivateLink isolates access to a specific service. This isolation minimizes the attack surface by preventing consumers from accessing resources beyond the exposed service. PrivateLink eliminates the need for internet gateways, NAT devices, or VPN connections, ensuring that all data traffic remains on the Amazon network. This chapter explores the mechanisms by which PrivateLink operates and the architectural decisions that make it an ideal solution for modern, distributed, and SaaS-based applications.

2. How AWS PrivateLink Works

AWS PrivateLink simplifies the secure connection between a service provider’s VPC and one or more consumer VPCs by leveraging several key AWS networking components. In this section, we explore the underlying architecture, the role of the Network Load Balancer (NLB), and the strategies for achieving high availability and fault tolerance.

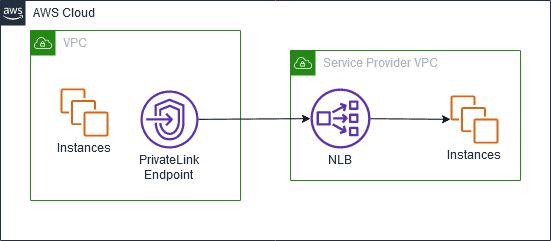

2.1. Architecture Overview

At the core of AWS PrivateLink is the concept of decoupling service exposure from full VPC connectivity. In a typical PrivateLink architecture, a service provider hosts its application services within its own VPC. These services are not exposed directly but are instead made accessible through a dedicated endpoint service. The service provider registers the application endpoints with a Network Load Balancer (NLB).

On the consumer side, an interface VPC endpoint is created. This endpoint provisions Elastic Network Interfaces (ENIs) within the consumer’s VPC subnets, providing private IP addresses that serve as the entry point to the service provider’s application. All communication between the consumer and provider occurs over the AWS private network, ensuring that traffic is isolated from the public internet. This architecture supports both a single AWS environment and hybrid scenarios where services hosted on-premises are connected via Direct Connect to an edge VPC that fronts a PrivateLink-enabled NLB.

2.2. Role of Network Load Balancer (NLB)

The Network Load Balancer is a critical component in the PrivateLink architecture. It serves as the front door for the service provider’s application endpoints. Key technical aspects of the NLB in a PrivateLink deployment include:

- Registration of Service Endpoints: The provider registers the IP addresses of the application instances (or other compute resources) behind the NLB. This registration abstracts the actual service endpoints, allowing the NLB to efficiently route traffic.

- Private Connectivity: The NLB is deployed within a private subnet and is not exposed to the internet. This ensures that all incoming traffic is routed securely through AWS’s internal network.

- Scalability and Performance: NLBs are designed to handle high volumes of traffic with low latency, making them well-suited for exposing services to thousands of consumer VPCs.

- Security Isolation: By funneling traffic through the NLB, the service provider limits the exposure of internal network details. Only the specific service endpoints registered with the NLB are accessible via PrivateLink.

2.3. High Availability and Fault Tolerance

To meet the demands of enterprise-grade applications, AWS PrivateLink is engineered for high availability and fault tolerance. Several design strategies ensure continuous service operation even in the event of component or Availability Zone (AZ) failures:

- Multi-AZ Deployments: The service provider can deploy both the application instances and the Network Load Balancer across multiple Availability Zones. This distributed setup ensures that if one AZ experiences an outage, the remaining AZs continue to handle traffic.

- Multiple ENIs in Consumer VPC: On the consumer side, creating interface endpoints in subnets across different AZs enhances redundancy. In the event that one ENI or AZ becomes unavailable, alternate ENIs maintain the private connectivity.

- Fault-Tolerant Routing: The underlying AWS networking infrastructure, combined with the inherent resilience of the NLB, ensures that traffic is automatically rerouted around failures, maintaining uninterrupted service availability.

3. Exposing Your Services with PrivateLink

Exposing services securely to multiple consumer VPCs requires careful configuration on both the service provider and consumer sides. This section outlines the technical steps and configurations needed to deploy AWS PrivateLink effectively.

3.1. Service Provider Configuration (Provider VPC)

For a service provider, AWS PrivateLink offers a structured way to expose specific application services while keeping the remainder of the VPC isolated. The key steps in configuring the provider side include:

- Hosting the Application Service: The provider deploys the application within its own VPC, ensuring that the service is robust and scalable.

- Deploying a Network Load Balancer: A private NLB is set up to act as the front end for the application service. The NLB is responsible for load balancing traffic across multiple instances or endpoints.

- Registering Service Endpoints: Application instances or specific IP addresses are registered with the NLB. In a multi-AZ configuration, endpoints are spread across different subnets to enhance availability.

- Creating the VPC Endpoint Service: The provider then configures a VPC endpoint service that makes the application accessible via PrivateLink. This endpoint service is advertised to consumer VPCs, which then create interface endpoints to access the service.

- Hybrid Connectivity Considerations: In scenarios where the application is hosted on-premises, the provider can extend the PrivateLink architecture by deploying an edge VPC with a NLB and establishing connectivity using AWS Direct Connect.

3.2. Consumer VPC Setup and ENI Management

On the consumer side, accessing a service through AWS PrivateLink is streamlined and does not require extensive changes to the existing VPC configuration. The essential steps include:

- Creating an Interface VPC Endpoint: The consumer initiates the setup by creating a VPC interface endpoint that connects to the provider’s endpoint service. This process automatically provisions one or more ENIs in the consumer VPC.

- Provisioning ENIs: The created ENIs receive private IP addresses from the consumer subnet, ensuring that all traffic between the consumer and provider remains within the AWS network.

- Seamless Integration: Since the connection is established through the ENIs, there is no need to modify the consumer’s route tables, nor is an internet gateway or NAT device required. This minimizes the configuration overhead and reduces the potential for misconfigurations.

- Unidirectional Connectivity: Traffic is initiated only from the consumer VPC to the provider service. This one-way connectivity model enhances security by ensuring that the provider’s VPC is not permitted to access additional resources within the consumer VPC.

4. PrivateLink vs. VPC Peering

Choosing between AWS PrivateLink and VPC peering requires an understanding of the specific network requirements and security considerations of your application. The following points outline the key differences and decision factors:

- Scope of Connectivity:

- VPC Peering: Establishes a full-mesh network connection between two VPCs, allowing bidirectional communication across all resources. This open connectivity can lead to broader security implications.

- PrivateLink: Restricts connectivity to a specific service, ensuring that only the exposed endpoints are accessible from the consumer VPC. This isolation limits the attack surface and enhances overall security.

- Security Considerations:

- VPC Peering: Requires extensive management of route tables and security groups, as the entire network is accessible once a peering connection is established. It is inherently bidirectional, meaning both VPCs can initiate traffic.

- PrivateLink: Enforces a unidirectional flow where only the consumer can initiate connections to the provider’s service. This controlled access model is ideal for SaaS applications where the provider does not need to access resources in the consumer VPC.

- Scalability:

- VPC Peering: Typically limited by the number of peering connections (up to 125 per VPC), making it less suitable for scenarios where a service must be exposed to thousands of VPCs.

- PrivateLink: Designed to support thousands of consumer VPCs, providing significant scalability benefits for SaaS providers.

- Network Complexity:

- VPC Peering: Often necessitates changes to route tables, as well as the configuration of internet gateways or NAT devices, which can introduce additional complexity.

- PrivateLink: Operates without the need for these modifications, offering a simpler and more streamlined approach to establishing private connectivity.

- CIDR Overlap:

- VPC Peering: Cannot be established between VPCs with overlapping CIDR blocks, which can be a limitation in certain network designs.

- PrivateLink: Is not affected by overlapping CIDRs, allowing for flexible network designs even when address spaces conflict.

5. Conclusion

AWS PrivateLink represents a paradigm shift in how services are securely exposed and consumed in the cloud. By leveraging a combination of private Network Load Balancers and interface endpoints, PrivateLink offers a highly secure, scalable, and fault-tolerant alternative to traditional VPC peering. Its architecture isolates service access to the specific endpoints intended for exposure, dramatically reducing security risks and administrative overhead. Moreover, the design supports multi-AZ deployments and thousands of consumer connections, making it ideal for modern SaaS applications and complex network environments.