Amazon CloudFront

1. Introduction

In today’s digital age, end-user experience is paramount. Speed, responsiveness, and security are critical for modern web applications. A Content Delivery Network (CDN) is an overlay network designed to reduce latency and improve the performance of content delivery by caching and delivering content from servers located closer to the user. The traditional model—where user requests travel long distances to a centralized data center—can lead to delays, higher latency, and reduced application responsiveness. CloudFront overcomes these limitations by distributing content to multiple Edge Locations, ensuring that end users can access data quickly and efficiently regardless of their geographic location.

CloudFront is not just a caching mechanism; it is a comprehensive solution that provides:

- Global Distribution: With hundreds of Edge Locations spread across the world, CloudFront minimizes latency by serving content from a location nearest to the end user.

- Scalability and Performance: CloudFront leverages the massive scale of AWS infrastructure to handle millions of requests per second while maintaining high throughput.

- Security: Integration with AWS Shield, AWS WAF, and features like Origin Access Control (OAC) enhance security by protecting against Distributed Denial of Service (DDoS) attacks and ensuring that only authenticated requests can reach your origin.

- Flexibility: CloudFront supports a variety of origin types—from Amazon S3 buckets and Application Load Balancers (ALB) to custom HTTP servers—and allows fine-grained control over caching behaviors.

- Cost Efficiency: By caching frequently accessed content at the edge, CloudFront helps reduce the load on your origins, which can result in cost savings for bandwidth and compute resources.

2. Core Concepts

This section provides an in-depth look at the foundational elements of Amazon CloudFront. We examine the overall architecture and operational flow, and then delve into the various types of origins that can be integrated with CloudFront.

2.1 CloudFront Overview: How It Works

CloudFront is designed around a two-tier caching hierarchy that optimizes content delivery at a global scale. To understand its inner workings, it is important to grasp the roles played by the two primary caching layers: the Edge Locations and the Regional Edge Caches.

2.1.1 The Two-Tier Caching Architecture

-

Edge Locations:

These are the first points of presence where content is served to end users. When a user sends a request for a file—such as an image, video, or web page—the request is routed to the nearest Edge Location based on network proximity and latency considerations. If the requested object is cached at the Edge Location, it is served immediately to the user, ensuring rapid response times. If not, the Edge Location forwards the request to a Regional Edge Cache. -

Regional Edge Caches:

Regional Edge Caches act as an intermediary layer between the Edge Locations and the origin. They hold a larger cache that can store less frequently requested objects for longer periods. If an Edge Location misses an object, it queries the Regional Edge Cache. Should the Regional Cache also miss, the request is then forwarded to the origin server (which may be an Amazon S3 bucket, an Application Load Balancer, or another HTTP server). Once the origin returns the requested content, it is cached at the Regional Edge Cache, then at the Edge Location, and finally delivered to the end user.

This multi-tier caching hierarchy not only reduces the load on origin servers by serving multiple users with the same cached object but also minimizes latency by ensuring that content is served from the closest possible location.

2.1.2 The Flow of a Request

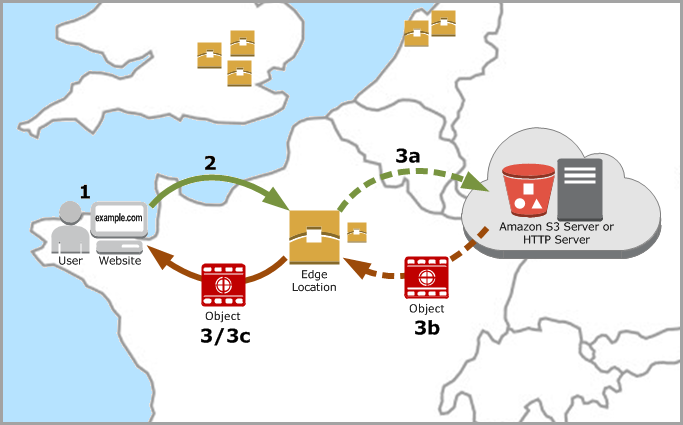

- A user accesses your website or application and sends a request for an object, such as an image file or an HTML file.

- DNS routes the request to the CloudFront POP (edge location) that can best serve the request, typically the nearest CloudFront POP in terms of latency.

- CloudFront checks its cache for the requested object. If the object is in the cache, CloudFront returns it to the user. If the object is not in the cache, CloudFront does the following:

- CloudFront compares the request with the specifications in your distribution and forwards the request to your origin server for the corresponding object—for example, to your Amazon S3 bucket or your HTTP server.

- The origin server sends the object back to the edge location.

- As soon as the first byte arrives from the origin, CloudFront begins to forward the object to the user. CloudFront also adds the object to the cache for the next time someone requests it.

This process is repeated and optimized across all regions, ensuring that popular content is cached at multiple layers of the network.

2.1.3 Benefits of the CloudFront Architecture

The two-tier caching system offers several advantages:

- Reduced Latency: By caching content closer to the end user, CloudFront drastically reduces the number of network hops required to serve content, leading to faster load times.

- Improved Availability: The distributed architecture means that even if one region or Edge Location experiences high traffic or a failure, other locations can continue to serve content.

- Load Reduction on Origins: By offloading repetitive content delivery to Edge Locations and Regional Caches, origins are spared from being overwhelmed by high request volumes.

- Enhanced Security: With caching distributed across a global network, CloudFront also provides a layer of defense against DDoS attacks. Even if the origin is targeted, many requests are absorbed by the distributed cache.

2.1.4 Integration with Other AWS Services

CloudFront is deeply integrated with other AWS services, creating a seamless experience for deploying globally distributed applications. Key integrations include:

-

Amazon S3:

CloudFront can be used to cache content stored in S3 buckets, whether those buckets serve as static website hosts or as secure storage for files. When used in combination with Origin Access Control (OAC), CloudFront ensures that only requests originating from the CDN can access the S3 bucket, greatly enhancing security. -

Elastic Load Balancing (ELB):

CloudFront works with Application Load Balancers (ALB) and Classic Load Balancers (CLB) to distribute dynamic content. This integration enables the CDN to manage the caching of dynamic web pages and API responses efficiently. -

AWS Certificate Manager (ACM):

SSL/TLS certificates can be managed via ACM, allowing for secure communication between viewers and CloudFront as well as between CloudFront and the origin. -

AWS Shield and AWS WAF:

CloudFront’s integration with AWS Shield and the AWS Web Application Firewall (WAF) provides additional layers of security, protecting your content delivery network from DDoS attacks and malicious traffic.

In summary, CloudFront’s core architecture—with its strategic combination of Edge Locations and Regional Edge Caches—ensures that content is delivered swiftly, securely, and reliably across the globe.

2.2 CloudFront Origins: S3, ALB, Custom Origins, and Multi-Origin Configurations

The flexibility of CloudFront is largely derived from its ability to integrate with a wide variety of origin sources. In this section, we explore the different origin types and the best practices for configuring them.

2.2.1 Amazon S3 as an Origin

Amazon S3 (Simple Storage Service) is one of the most common origins used with CloudFront. Whether serving static websites, downloadable files, or media content, S3 is highly scalable and reliable.

-

Enhanced Security with Origin Access Control:

CloudFront can be configured to work with S3 buckets using Origin Access Control (OAC)—previously known as Origin Access Identity (OAI). This mechanism ensures that objects stored in S3 can only be accessed through CloudFront, thereby preventing direct access via S3’s public endpoints. In a typical configuration, the bucket policy is set up to allow the OAC to perform GET operations while denying any public access attempts. -

Content Delivery for Static Websites:

When S3 is used to host static websites, it is important to enable Static Website Hosting on the bucket. CloudFront can then be positioned in front of the S3 website endpoint to deliver content securely and with reduced latency. -

Media Delivery:

CloudFront also supports S3 as an origin for media streaming applications, including Video on Demand (VoD) and live streaming through AWS Media Services. The caching capabilities of CloudFront are essential in reducing buffering and ensuring a smooth viewing experience.

2.2.2 Application Load Balancer and EC2 as Origins

For dynamic content and applications that require server-side processing, CloudFront can integrate with Application Load Balancers (ALB) and EC2 instances.

-

Using an Application Load Balancer:

An ALB distributes incoming traffic across multiple targets (such as EC2 instances) in one or more Availability Zones. When CloudFront uses an ALB as an origin, it is critical that the ALB is publicly accessible because CloudFront’s Edge Locations will fetch content via public IP addresses. Additionally, the ALB’s security groups must be configured to allow inbound requests from the known range of CloudFront IP addresses. -

Custom HTTP Origins on EC2:

CloudFront can also serve content from custom HTTP servers running on EC2 instances or even on-premises servers. Similar to ALB, these origins must be reachable publicly. The security configuration typically involves whitelisting the CloudFront IP addresses and configuring the server to expect certain headers or security tokens inserted by CloudFront. -

Multi-Origin Configurations:

In many scenarios, different types of content—such as static images, dynamic API responses, and personalized web pages—may be hosted on different origins. CloudFront supports configuring multiple origins within a single distribution by allowing you to define different cache behaviors based on the URL path pattern. For instance, requests to/images/*might be routed to an S3 bucket, while/api/*routes traffic to an ALB serving dynamic content. This flexibility enables you to optimize caching and security policies for each type of content.

2.2.3 Custom Origins and Their Use Cases

Custom origins refer to any HTTP/HTTPS server that is not part of the native AWS services such as S3 or ALB. These might include:

-

On-Premises Servers:

Organizations with legacy systems or specific compliance requirements might host content on their own servers. CloudFront can be used as a CDN in front of these on-premises origins to improve performance and provide additional security features. -

Third-Party Web Servers:

Even when using non-AWS hosting, CloudFront’s global network of Edge Locations can accelerate content delivery and reduce latency. -

API Endpoints:

When deploying RESTful APIs or GraphQL endpoints, using a custom origin allows you to leverage CloudFront’s caching and security capabilities to handle dynamic, non-cacheable content effectively.

2.2.4 Multi-Origin and Origin Groups

To maximize availability and resilience, CloudFront supports multi-origin configurations and origin groups:

-

Routing by Path Patterns:

As mentioned earlier, CloudFront allows you to define multiple origins within a distribution based on URL path patterns. This enables fine-grained control over which origin serves specific content, allowing for optimized cache behaviors and security settings. -

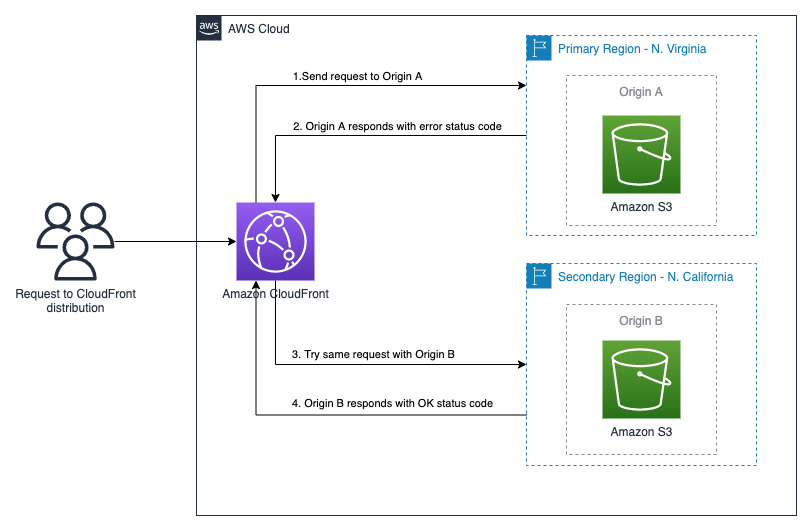

Origin Failover:

For mission-critical applications, you can configure origin groups where a primary and one or more secondary origins are defined. If the primary origin fails to respond appropriately—due to a network issue, an outage, or an internal error—CloudFront automatically fails over to a backup origin. This redundancy is essential for maintaining high availability and ensuring that end users are not affected by localized failures. -

Multi-Region Deployments:

In some cases, it is advantageous to deploy origins in multiple AWS regions. For example, you might use an S3 bucket in one region as your primary origin and another S3 bucket in a different region as a backup. With proper replication configured between the buckets, CloudFront can provide a highly available, multi-region content delivery solution.

In summary, the flexibility in configuring origins is one of CloudFront’s key strengths. Whether you are serving static content from an S3 bucket, dynamic content from an ALB, or custom content from an on-premises server, CloudFront’s architecture and configuration options allow you to tailor your delivery network to meet your specific performance, security, and reliability needs.

3. Origin Configuration and Security

A critical aspect of designing a robust CloudFront distribution is ensuring that your origins are not only optimized for performance but also secured against unauthorized access. This section explores techniques for customizing origin behavior through headers, securing origins with Origin Access Control (OAC) and AWS WAF, and implementing origin failover for high availability.

3.1 Customizing Origin Behavior with Headers

CloudFront provides the ability to insert and manipulate HTTP headers on requests that are forwarded from the CDN to the origin. This capability is essential for multiple reasons, including security, caching optimizations, and content personalization.

3.1.1 The Role of Custom Headers

Custom headers serve several key functions:

- Authentication and Authorization:

By inserting a secret header (for example,X-Origin-Verify), CloudFront can signal to the origin that the request has been vetted by the CDN. The origin can then enforce that only requests containing the correct header and value are allowed, effectively blocking any direct access attempts. - Cache Key Normalization:

Custom headers can be used to refine the cache key used by CloudFront. For instance, if you want to cache content based on specific device types, you might insert headers that indicate the user’s device, thereby allowing CloudFront to differentiate between requests. - Request Routing:

When multiple origins are configured, custom headers can be used to influence routing decisions. For example, certain headers might indicate that a request should be handled by a primary origin, while others could trigger failover to a secondary origin.

3.1.2 How Custom Headers Work in Practice

When a user sends a request to CloudFront, the request might already contain certain HTTP headers (such as user-agent or cookies). CloudFront allows you to add additional, fixed-value headers before the request is forwarded to the origin. This is accomplished by defining the header name and value as part of the cache behavior configuration. The origin then inspects these headers and applies its own security or business logic.

For example, consider a scenario where your origin should only accept requests that originate from CloudFront. You can configure CloudFront to insert a custom header (e.g., X-CloudFront-Secure: true) into every origin request. Your origin server, whether it is an ALB or a custom HTTP server, is then programmed to reject any request that does not contain this header. This two-layer security mechanism ensures that only traffic vetted by CloudFront reaches your origin.

3.1.3 Dynamic Versus Fixed Headers

CloudFront supports two types of header insertion:

- Fixed Headers:

These are predetermined values that do not change from request to request. They are ideal for authentication tokens or secret keys that must remain consistent. - Dynamic Headers:

Some headers can be generated based on attributes of the viewer’s request. For example, CloudFront can pass along headers that indicate the viewer’s device type, geographic location (such as city or country), or the HTTP version used. These dynamic headers can then be used by the origin to tailor responses or to enforce certain business rules.

3.1.4 Use Cases for Custom Header Insertion

The ability to customize headers opens up many possibilities:

- Identifying CloudFront Traffic:

Custom headers help origins distinguish between legitimate CloudFront requests and potentially malicious direct requests. - Enabling A/B Testing:

By inserting headers that indicate a particular user segment, origins can serve different content or vary caching strategies. - Optimizing Cache Performance:

Headers that identify specific viewer characteristics can help create more granular cache keys, ensuring that personalized content is delivered accurately without sacrificing caching efficiency.

In conclusion, custom headers are a powerful tool in the CloudFront arsenal. They not only enhance security by ensuring that only authorized traffic reaches the origin but also provide the flexibility needed to fine-tune caching and content delivery strategies.

3.2 Securing Origins: Origin Access Control (OAC) and AWS WAF Integration

Security is paramount when deploying a global CDN. In this section, we explore two critical security mechanisms: Origin Access Control (OAC) and AWS Web Application Firewall (WAF). Both of these features work together to ensure that your origin resources—be they S3 buckets or custom HTTP servers—are accessible only through CloudFront.

3.2.1 Securing S3 Origins with Origin Access Control (OAC)

When Amazon S3 is used as an origin for CloudFront, it is essential to prevent direct access to S3 objects from the public internet. Origin Access Control (OAC) is a mechanism that enforces this security by ensuring that the S3 bucket only accepts requests originating from a designated CloudFront distribution.

-

How OAC Works:

OAC is implemented by associating an identity (formerly known as Origin Access Identity or OAI) with a CloudFront distribution. The S3 bucket’s policy is then configured to grant read permissions exclusively to that identity. As a result, any attempt to bypass CloudFront and access the S3 bucket directly is denied. -

Enhanced Security Benefits:

This configuration not only protects the S3 bucket from unauthorized access but also reduces the risk of data exfiltration and unauthorized content scraping. It ensures that all requests are subject to CloudFront’s caching, logging, and security mechanisms.

3.2.2 Securing Custom Origins with AWS WAF and Custom Headers

Custom origins, such as those hosted on EC2 instances or on-premises servers, do not have the built-in access controls available with S3. To secure these origins, you can combine two strategies:

- Application-Level Security Using Custom Headers:

As described in Section 3.1, CloudFront can insert custom HTTP headers into requests. By configuring your origin to check for these headers and reject requests that do not contain them, you create a first layer of defense. - Network-Level Security with AWS WAF:

AWS Web Application Firewall (WAF) can be deployed in front of your custom origin—either directly integrated with an Application Load Balancer or as an additional layer in front of CloudFront. WAF allows you to define rules that block or allow traffic based on various characteristics (such as IP addresses, HTTP headers, or query strings). For example, you can create a WAF rule that only permits requests containing the correct custom header. This rule will block any attempt by malicious actors to access the origin directly.

3.2.3 Automated Key Rotation for Maximum Security

For enhanced security, especially when using custom headers for authentication, it is a best practice to periodically rotate the secret values. AWS Secrets Manager can be used to store these secret keys with an auto-rotation policy. A Lambda function (or another automation mechanism) can update the custom header value in both the CloudFront distribution and the WAF rules, ensuring that the key remains fresh and secure. This rotation minimizes the window of opportunity for an attacker to use a compromised key.

3.2.4 Summary of Origin Security Practices

To summarize, securing your origin in a CloudFront configuration involves:

- For S3 Origins:

Using OAC to ensure that only requests from CloudFront can access your S3 buckets. - For Custom Origins:

Using a combination of custom header insertion and AWS WAF rules to enforce access restrictions. - Additional Measures:

Implementing network-level security by whitelisting CloudFront IP ranges and automating key rotation to maintain a robust security posture.

3.3 Origin Failover: High Availability for Critical Workloads

In mission-critical applications, high availability is essential. CloudFront supports origin failover, ensuring that your application remains available even if the primary origin encounters an issue.

3.3.1 Configuring Origin Groups

An origin group is a configuration where two or more origins are linked in a failover relationship:

- Primary Origin:

The default origin that CloudFront uses to fetch content. - Secondary Origin:

A backup origin that CloudFront automatically falls back to if the primary origin fails to deliver the requested content, either because it is unreachable or returns an error status code.

By grouping origins in this way, you can create a resilient content delivery solution that withstands regional outages or localized failures.

3.3.2 Multi-Region and Multi-Provider Setups

High availability can be further enhanced by deploying origins in multiple regions or even across different service providers:

- Multi-Region Deployments:

For example, you might have an S3 bucket in one AWS region serving as the primary origin and a second S3 bucket in another region as the backup. With data replication configured between the two buckets, CloudFront can seamlessly switch to the backup if the primary becomes unavailable. - Combining Different Origin Types:

In some architectures, you might combine an ALB-based dynamic origin with an S3-based static origin. In such cases, CloudFront can be configured to fail over from the ALB to the S3 bucket if the dynamic content service encounters issues.

3.3.3 Failover Mechanisms and Health Checks

CloudFront uses health checks to monitor the availability of each origin within an origin group. These health checks ensure that if the primary origin fails, the system quickly identifies the issue and routes traffic to a healthy backup:

- Automatic Failover:

If a health check indicates that the primary origin is returning errors or is unresponsive, CloudFront automatically fails over to the secondary origin without manual intervention. - Configurable Criteria:

The failover criteria—such as the number of consecutive failed health checks or specific HTTP error codes—can be customized to suit the needs of your application.

3.3.4 Best Practices for High Availability

To achieve maximum resilience, consider the following best practices:

- Redundancy:

Always have at least one backup origin configured. For critical workloads, consider multiple backup origins. - Regular Health Check Monitoring:

Continuously monitor health check results and set up alerts to be notified of any anomalies. - Geographic Distribution:

Deploy origins in multiple geographic regions to mitigate the impact of regional outages. - Consistent Data Replication:

For S3-based origins, ensure that data is consistently replicated between regions so that failover can occur without content discrepancies.

By leveraging origin failover mechanisms, you can maintain uninterrupted service for your global audience even in the face of localized origin failures.

4. Encryption and Secure Delivery

Security is a fundamental concern when delivering content over the internet. CloudFront provides multiple mechanisms to ensure that the connection between the client and the origin is secure. In this section, we explore the practices and technologies involved in enforcing HTTPS and establishing end-to-end encryption.

4.1 Enforcing HTTPS: SSL/TLS Certificates and Viewer Protocol Policies

Securing the connection between the end user (viewer) and CloudFront is the first step in ensuring data integrity and confidentiality. HTTPS (using SSL/TLS) is the industry standard for securing web traffic.

4.1.1 Viewer Protocol Policy

The Viewer Protocol Policy in CloudFront dictates how the viewer’s browser communicates with the Edge Location:

- Allow All:

Both HTTP and HTTPS requests are accepted. While this policy offers maximum compatibility, it may not provide the necessary level of security. - Redirect HTTP to HTTPS:

In this configuration, any HTTP request is automatically redirected to HTTPS, ensuring that all traffic is encrypted. - HTTPS Only:

Only HTTPS requests are accepted, and any HTTP request is rejected. This policy provides the highest level of security, as it guarantees that all connections are encrypted.

For modern applications, especially those handling sensitive data or requiring regulatory compliance, using HTTPS Only is generally recommended.

4.1.2 Origin Protocol Policy

The Origin Protocol Policy controls how CloudFront communicates with the origin server:

- HTTP Only:

Content is fetched over unencrypted HTTP. This option is only advisable if the origin is trusted and if encryption is not a concern. - HTTPS Only:

CloudFront establishes a secure connection with the origin using HTTPS. This is recommended to maintain end-to-end encryption. - Match Viewer:

CloudFront uses the same protocol to connect to the origin as was used by the viewer. This provides a flexible option that can balance security and performance based on the viewer’s request.

4.1.3 SSL/TLS Certificates and Alternate Domain Names

For HTTPS to work correctly, valid SSL/TLS certificates must be in place:

- Default CloudFront Certificate:

CloudFront provides a default certificate (e.g.,*.cloudfront.net) which allows you to quickly get started with HTTPS. - Custom Certificates:

If you wish to use your own domain name (such aswww.example.com), you can import a certificate via AWS Certificate Manager (ACM) or upload a third-party certificate. When using custom domain names, you need to configure Alternate Domain Names (CNAMEs) in your CloudFront distribution. - Dedicated IP Versus SNI:

CloudFront supports both Server Name Indication (SNI) and dedicated IP address options for SSL/TLS. SNI is recommended for most scenarios as it is more cost effective and scalable.

4.1.4 Security Policies and Cipher Suites

To further harden HTTPS connections, CloudFront allows you to specify security policies that define:

- Minimum TLS Version:

Ensure that only modern, secure versions of TLS (such as TLS 1.2 or TLS 1.3) are accepted. - Cipher Suites:

Specify a list of acceptable ciphers to prevent weak encryption algorithms from being used.

By configuring these policies, you can ensure that all connections are secured using the strongest available encryption methods.

4.2 End-to-End Encryption: Origin-to-Viewer Data Security

While enforcing HTTPS between the viewer and CloudFront is crucial, ensuring that the entire data path—from the origin server to the viewer—is encrypted is equally important. End-to-end encryption ensures that data is protected at every step.

4.2.1 The Need for End-to-End Encryption

In many applications, sensitive information may be transmitted between the client and the origin. End-to-end encryption guarantees that data remains confidential and tamper-proof as it traverses multiple networks, including the public internet and AWS’s internal network.

4.2.2 Configuring End-to-End Encryption

To achieve end-to-end encryption:

- Origin Configuration:

The origin must be configured to accept HTTPS connections and to present a valid SSL/TLS certificate. For Application Load Balancers (ALB) or custom origins, this means installing a certificate from ACM or a trusted third party. - Certificate Matching:

The hostname specified in the CloudFront origin configuration must match the Common Name (CN) or Subject Alternative Name (SAN) on the certificate installed at the origin. - Handling Self-Signed Certificates:

Although self-signed certificates can be used on EC2 instances, they are not recommended for production environments. Instead, use certificates issued by trusted Certificate Authorities (CAs).

4.2.3 Challenges and Considerations

Implementing end-to-end encryption can present challenges:

- Certificate Management:

Certificates must be renewed and rotated before they expire. AWS Certificate Manager (ACM) can automate this process for supported domains. - Legacy Systems:

Some older systems might not support the latest TLS versions, necessitating careful planning to maintain compatibility without compromising security. - Performance Impact:

While encryption introduces a slight overhead, the impact is usually negligible compared to the benefits in security. CloudFront’s optimized network helps mitigate any performance penalties.

4.2.4 Summary of Encryption Best Practices

For a robust, secure CloudFront deployment, it is recommended to:

- Enforce HTTPS between the viewer and CloudFront.

- Use HTTPS for the connection between CloudFront and the origin.

- Employ modern TLS versions and secure cipher suites.

- Manage certificates proactively using ACM or other certificate management tools.

By implementing these practices, you can ensure that all data exchanged between the origin and the viewer remains secure from interception or tampering.

5. Content Customization and Access Control

Amazon CloudFront offers advanced features that allow you to customize content delivery and enforce access controls based on geographic location or through edge computing. In this section, we explore geographic restrictions and edge computing capabilities provided by CloudFront Functions and Lambda@Edge.

5.1 Geographic Restrictions: Blocking or Allowing Traffic by Location

Geographic restrictions are a powerful feature that lets you control access to your content based on the location of the viewer. This functionality is useful for complying with legal regulations, managing content licensing, or enforcing regional business policies.

5.1.1 How Geo Restriction Works

CloudFront can restrict access by comparing the viewer’s IP address against a database that maps IP ranges to countries. There are two primary modes:

- Allow List:

Only viewers from specified countries are permitted to access the content. All other requests are blocked. - Block List:

Viewers from specified countries are blocked, while all others are allowed to access the content.

These settings are applied across the entire distribution, meaning that they affect every origin and cache behavior configured in the CloudFront distribution.

5.1.2 Use Cases for Geographic Restrictions

Geographic restrictions are commonly employed for:

- Content Licensing and Copyright Compliance:

Media companies can restrict access to content based on regional licensing agreements. - Regulatory Compliance:

Organizations operating in regulated industries can enforce regional restrictions to comply with local laws. - Targeted Marketing:

Businesses can tailor content delivery to specific regions, ensuring that users only see content relevant to their locale.

5.1.3 Configuring and Managing Geo Restrictions

Geo restrictions can be configured via the CloudFront management console, AWS CLI, or API. Administrators specify the list of allowed or blocked countries, and these settings are automatically propagated across the global network of Edge Locations.

In summary, geographic restrictions provide a simple yet effective way to control content access and ensure compliance with legal and business requirements.

5.2 Edge Computing

Edge computing extends CloudFront’s functionality beyond simple caching. By running code at the Edge, you can manipulate HTTP requests and responses, perform authentication and authorization, and even conduct real-time content transformations. CloudFront supports two primary models for edge computing: CloudFront Functions and Lambda@Edge.

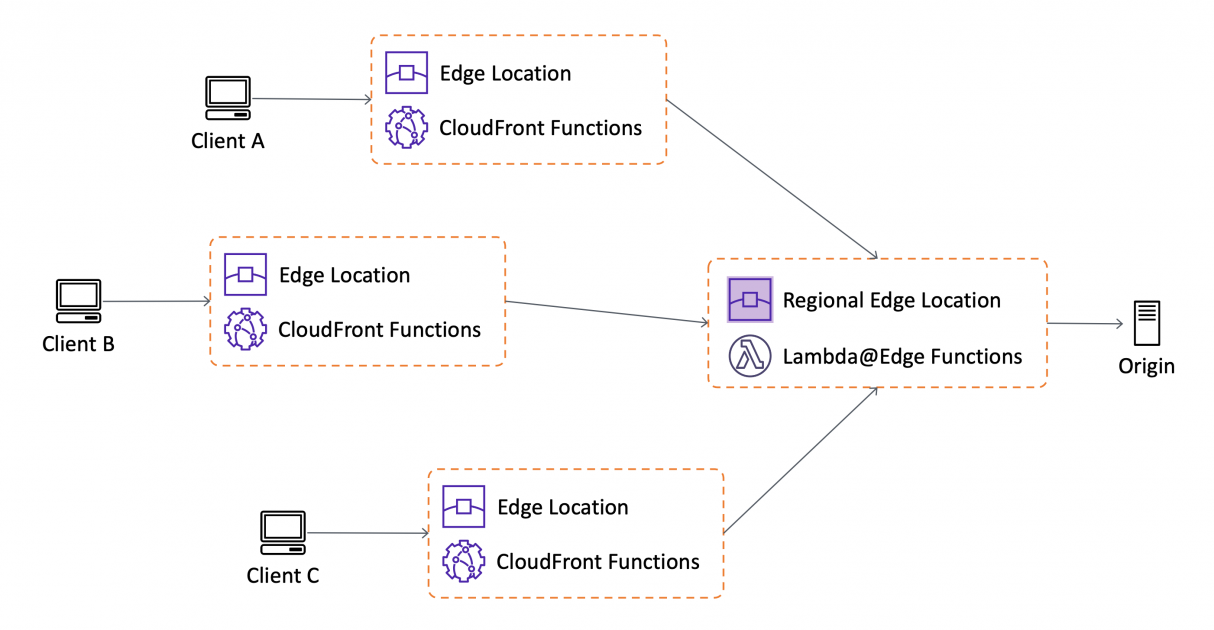

5.2.1 CloudFront Functions (Lightweight JavaScript at the Edge)

CloudFront Functions are designed for high-performance, latency-sensitive tasks that must be executed as close as possible to the viewer. They offer a lightweight execution environment for simple tasks such as header manipulation, URL rewrites, or cache key normalization.

5.2.1.1 Characteristics of CloudFront Functions

- Ultra-Low Latency:

CloudFront Functions have a sub-millisecond startup time, making them ideal for tasks that require minimal processing delay. - Process-Based Isolation:

These functions run in a highly optimized, isolated environment within the Edge Location. - Limited Execution Time and Memory:

With a maximum execution time of one millisecond and a memory limit of 2 MB, they are best suited for lightweight tasks. - No External Network Access:

CloudFront Functions are designed to be self-contained; they cannot make outbound network calls, ensuring that the processing remains fast and predictable.

5.2.1.2 Use Cases for CloudFront Functions

- Cache Key Normalization:

Adjusting the request parameters (such as headers, cookies, and query strings) to create an optimal cache key. - Request Rewrites and Redirects:

Modifying incoming URLs or redirecting requests based on specific conditions. - Token Validation:

Quickly inspecting authentication tokens (e.g., JWT) present in request headers to enforce authorization at the Edge.

These functions are typically deployed at the viewer request or viewer response stage, ensuring that modifications occur before the request reaches the origin or before the response is sent back to the client.

5.2.2 Lambda@Edge (Custom Code Execution for Advanced Workflows)

Lambda@Edge is a more robust solution for running custom code in response to CloudFront events. Unlike CloudFront Functions, Lambda@Edge supports a wider range of use cases and offers greater flexibility in terms of programming languages, execution time, and network access.

5.2.2.1 Characteristics of Lambda@Edge

- Language Support:

Functions can be written in Node.js or Python, enabling the use of complex logic and third-party libraries. - Extended Execution Time:

Lambda@Edge functions allow up to 5 seconds of execution time for viewer-triggered events and up to 30 seconds for origin-triggered events. - VM-Based Isolation:

Functions run in a virtual machine environment, which provides additional security and flexibility. - Network and File System Access:

Lambda@Edge functions can perform outbound network calls and interact with local temporary storage, enabling integration with other AWS services or external APIs. - Access to Request Bodies:

These functions have the ability to inspect and modify the full request payload, making them ideal for tasks such as content transformation or detailed authentication workflows.

5.2.2.2 Use Cases for Lambda@Edge

- Advanced Authentication and Authorization:

Validate complex tokens or query external authentication services to determine if a request should be permitted. - User-Agent Based Content Delivery:

Customize responses based on the client’s device type. For example, redirecting mobile users to lower resolution images to optimize bandwidth usage. - Dynamic Content Personalization:

Perform server-side personalization of web pages or API responses based on user profiles or geographic data. - Integrating with Backend Services:

Invoke additional AWS services (such as DynamoDB or API Gateway) to enrich the content served to the user.

5.2.3 Comparing CloudFront Functions and Lambda@Edge

To help you understand the difference between CloudFront Functions and Lambda@Edge, here’s a quick comparison:

| CloudFront Functions | Lambda@Edge | |

|---|---|---|

| Runtime support | JavaScript (ECMAScript 5.1 compliant) | Node.js, Python |

| Execution location | 218+ CloudFront Edge Locations | 13 CloudFront Regional Edge Caches |

| CloudFront triggers supported | Viewer request Viewer response | Viewer request Viewer response Origin request Origin response |

| Maximum execution time | Less than 1 millisecond | 5 seconds (viewer triggers) 30 seconds (origin triggers) |

| Maximum memory | 2MB | 128MB (viewer triggers) 10GB (origin triggers) |

| Total package size | 10 KB | 1 MB (viewer triggers) 50 MB (origin triggers) |

| Network access | No | Yes |

| File system access | No | Yes |

| Access to the request body | No | Yes |

| Pricing | Free tier available; charged per request | No free tier; charged per request and function duration |

In many architectures, you might use a combination of both approaches—CloudFront Functions for ultra-fast, simple tasks, and Lambda@Edge for more sophisticated processing.

6. Performance and Advanced Integrations

Optimizing the performance of your content delivery network is critical for maintaining a responsive and efficient user experience. In this section, we examine AWS Global Accelerator and cache optimization strategies that further enhance CloudFront’s performance.

6.1 AWS Global Accelerator: Improving Availability and Latency

AWS Global Accelerator is a networking service designed to improve the availability and performance of your applications by directing user traffic through the optimal AWS edge location. It works in tandem with CloudFront by leveraging the AWS global network.

6.1.1 How Global Accelerator Works

Global Accelerator uses Anycast IP addresses to ensure that user requests are automatically routed to the closest AWS edge location:

- Anycast IP:

Multiple edge locations share the same IP address. When a user sends a request, the global routing system directs the traffic to the nearest edge location based on network performance metrics. - Optimized Routing:

Once the request reaches the edge location, it is forwarded over the AWS private network to the application endpoint (such as an ALB or EC2 instance), bypassing the public internet and reducing latency.

6.1.2 Benefits of Global Accelerator

- Consistent Performance:

By routing traffic over AWS’s internal network, Global Accelerator minimizes latency and packet loss, ensuring a consistent experience for users regardless of their location. - Static IP Addresses:

Global Accelerator provides two static Anycast IP addresses that remain constant over time. This simplifies network configuration and DNS management, especially for applications that require a fixed IP address. - Rapid Failover:

With continuous health checks on the application endpoints, Global Accelerator automatically reroutes traffic to healthy endpoints in the event of an outage, providing fast and transparent failover. - Support for Non-HTTP Protocols:

Unlike CloudFront, which is optimized for HTTP and HTTPS, Global Accelerator supports TCP and UDP traffic. This makes it an ideal choice for gaming, VoIP, IoT, and other latency-sensitive applications that do not rely solely on HTTP.

6.1.3 Integrating Global Accelerator with CloudFront

Although Global Accelerator and CloudFront serve different use cases, they can complement each other:

- Use Case Differentiation:

CloudFront excels at caching and accelerating web content (both static and dynamic), while Global Accelerator focuses on routing and network performance improvements for any TCP/UDP-based application. - Combined Architecture:

In some scenarios, you might deploy CloudFront to cache content and use Global Accelerator to ensure that requests reach your origin with minimal latency, especially if your application is distributed across multiple regions.

6.1.4 Best Practices for Using Global Accelerator

- Endpoint Health Monitoring:

Regularly monitor the health of endpoints and configure appropriate thresholds for failover. - Static IP Whitelisting:

Since Global Accelerator provides static IP addresses, update any firewall or network configurations to allow traffic from these addresses. - Application-Level Redundancy:

Combine Global Accelerator with multi-region or multi-origin setups to further enhance the availability and resilience of your application.

6.1.5. Global Accelerator vs Cloudfront

| Feature | AWS CloudFront | AWS Global Accelerator |

|---|---|---|

| Use case | HTTP protocol | HTTP and non-HTTP protocols |

| Performance | Improves performance for both static and dynamic HTTP/S content | Improves performance for a wide range of applications over TCP or UDP |

| Static IPs | Uses multiple sets of dynamically changing IP addresses | Provides a fixed set of two static IP addresses |

| Edge Locations | Uses Edge locations to cache content | Uses Edge locations to find an optimal path to the nearest regional endpoint |

| Pricing | Based on data transfer out and HTTP requests | Charges fixed hourly fees plus data transfer rates |

| DDoS Protection | Provides DDoS Protection with AWS Shield | Provides DDoS Protection with AWS Shield |

6.2 Cache Optimization: TTLs, Invalidation, and Cache Policies

Efficient caching is the backbone of a high-performance CDN. In this section, we cover the principles of cache optimization, including Time-to-Live (TTL) settings, cache invalidation strategies, and the configuration of custom cache policies.

6.2.1 Understanding TTL and Cache Behaviors

- Time-to-Live (TTL):

TTL defines the duration for which an object is cached at an Edge Location or Regional Edge Cache. A well-configured TTL can reduce the number of requests to the origin and improve content delivery speed. - Cache Invalidation:

When content changes, you need to invalidate the cache to ensure that outdated content is not served. CloudFront supports manual invalidation requests, though it is best practice to design your cache behavior to minimize the need for frequent invalidations. - Custom Cache Policies:

CloudFront allows you to define custom cache policies that specify how query strings, headers, and cookies are used to build the cache key. This level of granularity ensures that you can cache content effectively while still delivering personalized content when necessary.

6.2.2 Strategies for Cache Optimization

- Segmentation of Content:

Different types of content (e.g., static images, dynamic API responses) may require different caching strategies. By configuring multiple cache behaviors within a single distribution, you can apply optimal TTLs and cache key policies to each content type. - Cache Key Normalization:

Normalizing the cache key by removing unnecessary query parameters or headers helps maximize the cache hit ratio. This can be done using CloudFront Functions or Lambda@Edge. - Automated Cache Invalidation:

In scenarios where content is updated frequently, consider implementing automated cache invalidation strategies to ensure that end users receive the latest content without manual intervention.

6.2.3 Monitoring and Tuning Cache Performance

- Metrics and Logging:

Use AWS CloudWatch metrics and CloudFront logs to monitor cache hit ratios, latency, and the frequency of origin requests. These insights help you identify areas for optimization. - Iterative Tuning:

Cache optimization is an ongoing process. Regularly review performance data and adjust TTLs, cache policies, and invalidation strategies to ensure that the caching layer remains efficient as your content and traffic patterns evolve.

By applying these cache optimization strategies, you can significantly reduce latency, decrease load on your origin, and improve the overall performance of your content delivery network.

7. Monitoring: Logging, Metrics, and Cost Alerts

Robust monitoring is essential to ensure that your CloudFront distribution is performing as expected and to detect any anomalies early. This section covers the various tools and techniques for monitoring CloudFront, from logging and metrics to setting up cost alerts.

7.1 CloudFront Access Logs

- Detailed Request Logging:

CloudFront can generate access logs that record detailed information about every user request. These logs include data such as the requester’s IP address, request timestamp, HTTP method, response status, and bytes served. This information is invaluable for troubleshooting, performance tuning, and forensic analysis. - Log Storage and Analysis:

Access logs can be stored in an Amazon S3 bucket, where they can be further analyzed using tools such as Amazon Athena or third-party log analysis solutions. Regular analysis of these logs helps in identifying trends, detecting abnormal traffic patterns, and understanding user behavior.

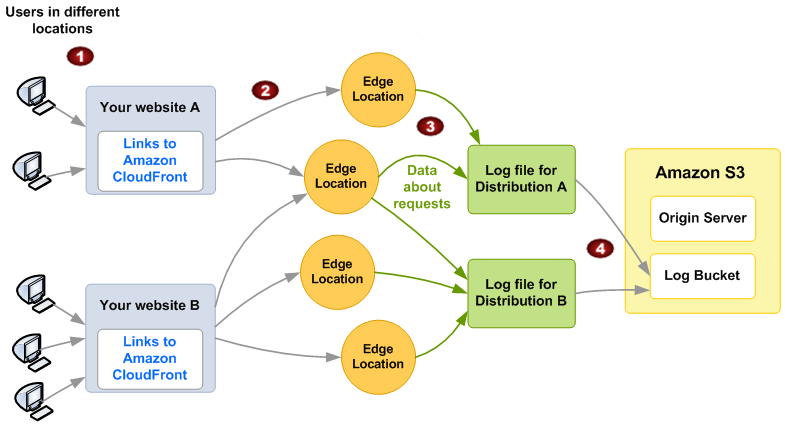

The following diagram shows how CloudFront logs information about requests for your objects. In this example, the distributions are configured to send access logs to an Amazon S3 bucket.

-

In this example, you have two websites, A and B, and two corresponding CloudFront distributions. Users request your objects using URLs that are associated with your distributions.

-

CloudFront routes each request to the appropriate edge location.

-

CloudFront writes data about each request to a log file specific to that distribution. In this example, information about requests related to Distribution A goes into a log file for Distribution A. Information about requests related to Distribution B goes into a log file for Distribution B.

-

CloudFront periodically saves the log file for a distribution in the Amazon S3 bucket that you specified when you enabled logging. CloudFront then starts saving information about subsequent requests in a new log file for the distribution.

7.2 Metrics and Dashboards

- AWS CloudWatch Integration:

CloudFront automatically publishes metrics to Amazon CloudWatch, allowing you to monitor key performance indicators (KPIs) such as cache hit ratio, latency, and error rates. CloudWatch dashboards can be customized to provide real-time insights into your distribution’s performance. - Custom Alarms:

Set up CloudWatch alarms to notify you when metrics exceed predefined thresholds. For example, you can create alarms for unusually high error rates or sudden drops in cache hit ratios, enabling you to respond quickly to potential issues.

7.3 Cost Monitoring and Alerts

- Tracking Data Transfer Costs:

With CloudFront’s global distribution, monitoring data transfer costs is essential. AWS Cost Explorer and CloudWatch metrics can help you track these costs over time, ensuring that you remain within budget. - Budget Alerts:

Configure AWS Budgets to receive alerts when spending approaches or exceeds a specified limit. This proactive approach helps prevent unexpected charges and ensures that you can optimize your distribution configuration to control costs.

7.4 Best Practices for Monitoring

- Regular Audits:

Periodically review CloudFront logs, metrics, and cost reports to identify any performance degradation or anomalies. - Automated Reporting:

Automate the generation of performance and cost reports to keep stakeholders informed and to facilitate proactive management of your content delivery network. - Integration with Incident Management:

Integrate CloudWatch alarms and logs with your incident management or on-call systems so that any issues are escalated and resolved in a timely manner.

By establishing a comprehensive monitoring and alerting framework, you ensure that your CloudFront deployment remains healthy, performant, and cost effective.

8. Conclusion

Amazon CloudFront is a versatile and robust solution for delivering content at scale. Whether you are hosting a static website, streaming media, or deploying a complex, globally distributed application, CloudFront provides the tools and integrations necessary to ensure that your content is delivered quickly, securely, and reliably.